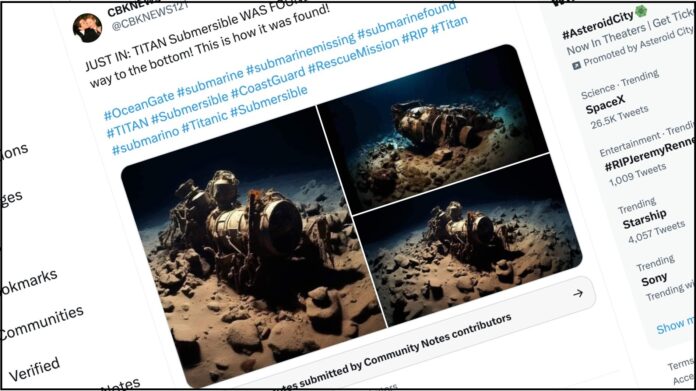

Scam accounts are taking advantage of the Titan submersible implosion to spread fake, AI-generated images that claim to show debris on the seafloor.

On Thursday, the US Coast Guard announced it had found some wreckage of the Titan, with all five passengers onboard presumed dead. No official photos of the debris have been released, but that didn’t stop some people from circulating fake, AI-generated pics.

Starting on Thursday, several accounts on Twitter and Facebook shared photos that claimed to show the Titan’s debris at the bottom of the ocean.

One of the AI-generated images of the wreckage.

(Credit: Twitter)

But if you look closely, the images are seriously off. For one, the wreckage looks more like a destroyed rocket engine than the submersible. The images also appear too clear and perfectly lit, even though the wreckage sits so deep that no sunlight reaches it. On Thursday, the US Coast Guard said it had found the “tail section” of the submersible off the bow of the sunken Titanic, which lies about 12,500 feet below sea level.

The actual OceanGate Titan submersible

(Credit: Getty Images)

Other discrepancies include how a couple of pictures appear to show the sea’s surface at the top, and coral growing over the wreckage even though the Titan likely imploded only days ago.

Another AI-generated photo shows the sea surface at the top. The wreckage is also strangely covered in coral.

(Credit: Twitter)

Meanwhile, a separate image that shows shoes within the debris isn’t AI-generated. Instead, it’s an actual photo taken at the site of the sunken Titanic almost 20 years ago.

Despite the apparent flaws, the suspected AI-generated images received up to 480,000 views on Twitter. In addition, some of the accounts pushing the images have the blue checkmark, which used to denote legitimacy but can now be purchased for just a few bucks.


Of note: One of the accounts spreading the fake images uses John F. Kennedy, Jr. and his wife Carolyn Bessette as its profile photo, an obvious sign the account is associated with conspiracy groups like QAnon rather than a legitimate news source.

Some users who noticed the fakery are calling on the Twitter accounts to pull the images. At the same time, Twitter’s Community Notes feature has been slapped on many of the posts in an effort to debunk the misinformation. Still, the issue underscores how AI-generated pictures can easily fill a void in the absence of real photos of a news event.

The origins of the suspected AI-generated images aren’t totally clear. But it looks like three of them came from a parody account called the “Prince of Deepfakes,” which has used the Midjourney AI image generator before.
