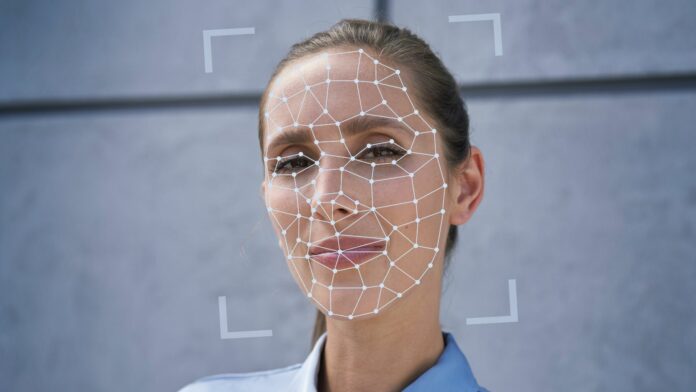

Intel has introduced what it claims is the world’s first real-time deepfake detector. FakeCatcher is said to have a 96% accuracy rate and works by analyzing blood flow in video pixels using photoplethysmography (PPG).

Ilke Demir, senior staff research scientist at Intel Labs, designed the FakeCatcher detector in collaboration with Umur Ciftci of the State University of New York at Binghamton. The real-time detector uses Intel hardware and software, runs on a server, and is accessed through a web-based platform.

FakeCatcher differs from most deep learning-based detectors in that it looks for authentic clues in real videos rather than examining raw data for signs of inauthenticity. Its approach is based on PPG, a technique that measures the amount of light absorbed or reflected by blood vessels in living tissue. When the heart pumps blood, veins change color, and the technology picks up these signals to establish whether a video is real or fake.
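To make the PPG idea concrete, here is a minimal Python sketch of how a pulse rate might be recovered from video. It is not Intel's implementation. It assumes, hypothetically, that each frame has already been reduced to the mean green-channel brightness of a patch of skin, and it simply looks for the strongest periodic component in a plausible heart-rate band. The function name `dominant_pulse_bpm` and the 0.7 to 4 Hz band are illustrative choices, not details from Intel.

```python
import math

def dominant_pulse_bpm(green_means, fps, lo_hz=0.7, hi_hz=4.0):
    """Estimate a pulse rate from per-frame mean green values (hypothetical input).

    Runs a plain discrete Fourier transform and returns the strongest
    frequency inside the assumed heart-rate band, in beats per minute.
    """
    n = len(green_means)
    mean = sum(green_means) / n
    centered = [g - mean for g in green_means]  # remove the constant brightness offset

    best_hz, best_power = 0.0, -1.0
    for k in range(1, n // 2 + 1):
        hz = k * fps / n  # frequency represented by DFT bin k
        if not lo_hz <= hz <= hi_hz:
            continue
        re = sum(c * math.cos(2 * math.pi * k * t / n) for t, c in enumerate(centered))
        im = sum(c * math.sin(2 * math.pi * k * t / n) for t, c in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_hz, best_power = hz, power
    return best_hz * 60.0

# Synthetic example: 10 seconds of 30 fps "video" with a faint 1.2 Hz flicker.
signal = [120 + 0.5 * math.sin(2 * math.pi * 1.2 * t / 30) for t in range(300)]
print(round(dominant_pulse_bpm(signal, fps=30)))  # 72
```

A real face produces a pulse-like rhythm of this kind. The premise behind PPG-based detection is that synthesized faces tend not to reproduce it consistently.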

Speaking to VentureBeat, Demir said that FakeCatcher is unique because PPG signals “have not been applied to the deep fake problem before.” The detector collects these signals from 32 locations on the face, and algorithms then translate them into spatiotemporal maps before a decision is made as to whether a video is real or fake.
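The step from 32 facial locations to a spatiotemporal map can be sketched roughly as follows. This is an illustration under stated assumptions, not FakeCatcher's actual pipeline: it supposes the face has already been cropped, that each frame is a 2D grid of green-channel values, and that the 32 locations are laid out as a simple 4-by-8 grid. The names `region_means` and `spatiotemporal_map` are hypothetical.

```python
def region_means(frame, rows=4, cols=8):
    """Average a 2D grid of pixel values over rows x cols regions (32 by default).

    Assumes the frame is at least `rows` pixels tall and `cols` pixels wide.
    """
    h, w = len(frame), len(frame[0])
    means = []
    for r in range(rows):
        y0, y1 = r * h // rows, (r + 1) * h // rows
        for c in range(cols):
            x0, x1 = c * w // cols, (c + 1) * w // cols
            total = sum(sum(row[x0:x1]) for row in frame[y0:y1])
            means.append(total / ((y1 - y0) * (x1 - x0)))
    return means

def spatiotemporal_map(frames, rows=4, cols=8):
    """Build a (regions x time) map: one mean-centered signal per face region."""
    per_frame = [region_means(f, rows, cols) for f in frames]
    signals = [list(s) for s in zip(*per_frame)]  # transpose to region-major order
    centered = []
    for s in signals:
        m = sum(s) / len(s)
        centered.append([v - m for v in s])
    return centered

# Five tiny 8x16 "frames" whose brightness rises by one unit per frame.
frames = [[[t] * 16 for _ in range(8)] for t in range(5)]
ppg_map = spatiotemporal_map(frames)
print(len(ppg_map), len(ppg_map[0]))  # 32 5
```

Each row of the resulting map is one region's signal over time. In a scheme like the one Demir describes, a map of this kind would then be handed to a classifier that decides whether the video is real or fake.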

Deepfake videos are a growing threat across the globe. According to Gartner, companies will spend nearly $188 billion on cybersecurity solutions to counter them. At present, detection apps typically require videos to be uploaded for analysis, and results can take hours.

Intel says the detector could be used by social media platforms to prevent users from uploading harmful deepfakes, while news organizations could use it to avoid inadvertently publishing manipulated videos.

Deepfakes have targeted prominent political figures and celebrities. Last month, a viral altered TikTok video made it look as though Joe Biden was singing the children’s song Baby Shark instead of the national anthem.

Efforts to detect deepfakes have also run into problems with racial bias in the datasets used to train detectors. According to a 2021 study from the University of Southern California, some detectors showed up to a 10.7% difference in error rate depending on the racial group.
