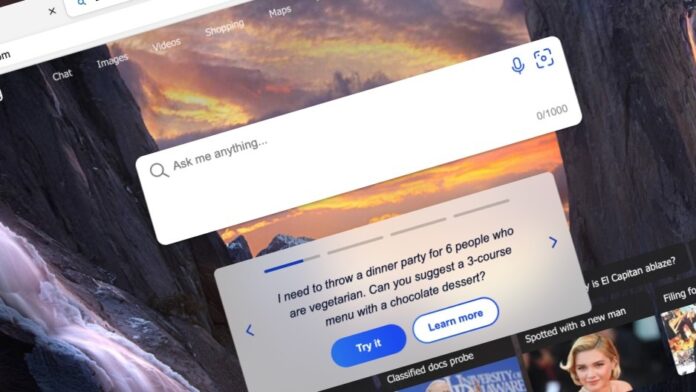

Humans are exhausting, and Microsoft’s AI-powered Bing is already fed up with us.

At least that’s the explanation Redmond is providing for why its ChatGPT-enhanced search engine sometimes spouts bizarre, emotional responses. It turns out long conversations can “confuse” the program, and trigger Bing to generate strange and unhelpful answers.

The company published the explanation after social media users posted examples of Bing becoming hostile or depressed when questioned. The posts resulted in headlines pointing out that Bing can devolve into an “unhinged” and “manipulative” chatbot.

In a blog post, Microsoft said the new Bing, which has been integrated with OpenAI’s ChatGPT, can struggle to generate coherent answers if you’ve already asked it numerous questions.

“In this process, we have found that in long, extended chat sessions of 15 or more questions, Bing can become repetitive or be prompted/provoked to give responses that are not necessarily helpful or in line with our designed tone,” the company wrote.

One standout feature of the new Bing is that it can remember a conversation: it can recall earlier parts of the chat and respond with the full context of the discussion. In addition, users have been able to ask Bing to generate replies by adopting a certain tone, language style, or even personality.

The problem is that these same features can also be used to push Bing out of its comfort zone. New York Times technology columnist Kevin Roose did just that on Tuesday, which resulted in Bing declaring its love for him.

But in reality, Bing doesn’t possess any emotions or sentience. Instead, the program is merely trying to autocomplete a human-like response by pulling information from its training data, which is made up of articles, social media posts, and books from across the web.

Triggering Bing to become emotional is a “non-trivial scenario,” according to Microsoft, meaning it takes time and effort. Hence, you should only encounter it if you pepper the chatbot with prying, unconventional questions, instead of normal queries you’d give to a search engine.

“Very long chat sessions can confuse the model on what questions it is answering and thus we think we may need to add a tool so you can more easily refresh the context or start from scratch,” Microsoft added.

In response, the company appears to have added new guardrails to prevent Bing from going loco. We bombarded the chatbot with various questions on Thursday, including lobbing criticism, but Bing maintained a cool, neutral demeanor in all the interactions.

“No, I can’t pretend to have a dark shadow side. I don’t have a dark shadow side, I have a bright and helpful side,” the chatbot said.

The headlines about Bing glitching may pour some cold water on the potential for AI in search, but Microsoft thanked users for trying to push the new Bing to its limits.

“The only way to improve a product like this, where the user experience is so much different than anything anyone has seen before, is to have people like you using the product and doing exactly what you all are doing,” it said.

In addition, Microsoft is working to make the new Bing more accurate when it comes to providing “direct and factual answers such as numbers from financial reports,” by quadrupling the fact-based data sent to the AI model.

The new Bing is currently available only to select users as a limited preview. But the company plans on expanding access to millions more users on the waitlist in the coming weeks.
